CrackNet, a deep learning model for segmentation in crack detection.

This work is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License.

It is really interesting to apply deep learning to an unrelated field. Truly unrelated, like civil engineering, my undergraduate major. I was lucky to find an interdisciplinary area that combines civil engineering with what actually interests me: deep learning and computer vision. I sometimes wonder whether I was truly drawn by the beauty of machine learning algorithms and the mystery behind neural network models, or just by the promising salary (so it seems). Anyway, this research was a lot of fun and gave me some good practice. Let's get to the topic: crack segmentation and the CrackNet model.

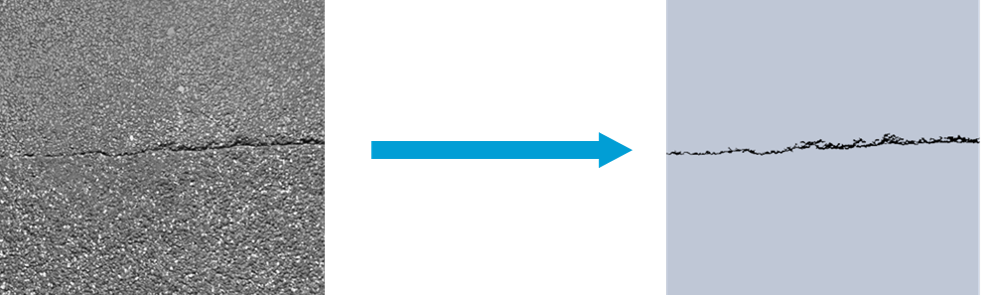

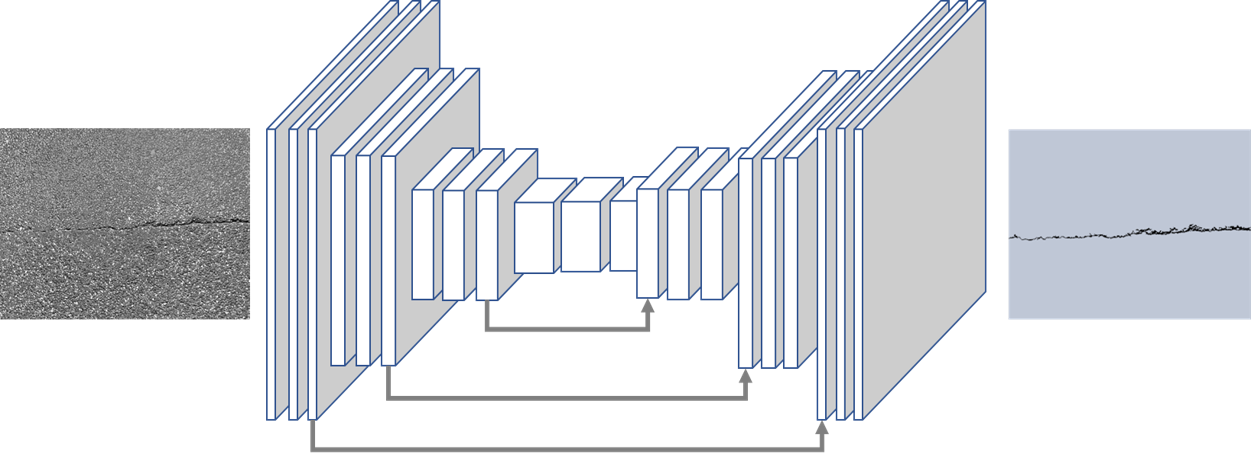

Segmentation is a general problem in computer vision, and there are already many good architectures for it, such as FCN, UNet, and DeepLab. I saw no reason to invent a new architecture, and UNet is a good starting point for this fairly simple task. I used a UNet with three down-sampling layers, one fewer than the four in the original UNet paper. The input is an RGB image, and the output is a binary segmentation map of the same size as the input.
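For readers who want something concrete, here is a minimal PyTorch sketch of a UNet with three down-sampling steps. It is not the original CrackNet code: the names `double_conv` and `SmallUNet`, the base width of 32 channels, the use of BatchNorm, and the padded 3x3 convolutions (which keep the output the same size as the input, unlike the unpadded convolutions of the original paper) are all assumptions. The network outputs two-channel logits per pixel (background, crack), so the binary map is the argmax over channels. Input height and width must be divisible by 8.

```python
import torch
import torch.nn as nn


def double_conv(in_ch: int, out_ch: int) -> nn.Sequential:
    """Two padded 3x3 convolutions, each followed by BatchNorm and ReLU."""
    return nn.Sequential(
        nn.Conv2d(in_ch, out_ch, kernel_size=3, padding=1),
        nn.BatchNorm2d(out_ch),
        nn.ReLU(inplace=True),
        nn.Conv2d(out_ch, out_ch, kernel_size=3, padding=1),
        nn.BatchNorm2d(out_ch),
        nn.ReLU(inplace=True),
    )


class SmallUNet(nn.Module):
    """Hypothetical UNet with three down-sampling steps (the original has four)."""

    def __init__(self, in_channels: int = 3, num_classes: int = 2, base: int = 32):
        super().__init__()
        self.pool = nn.MaxPool2d(2)
        # Encoder
        self.enc1 = double_conv(in_channels, base)
        self.enc2 = double_conv(base, base * 2)
        self.enc3 = double_conv(base * 2, base * 4)
        self.bottleneck = double_conv(base * 4, base * 8)
        # Decoder: upsample, concatenate the skip connection, convolve
        self.up3 = nn.ConvTranspose2d(base * 8, base * 4, kernel_size=2, stride=2)
        self.dec3 = double_conv(base * 8, base * 4)
        self.up2 = nn.ConvTranspose2d(base * 4, base * 2, kernel_size=2, stride=2)
        self.dec2 = double_conv(base * 4, base * 2)
        self.up1 = nn.ConvTranspose2d(base * 2, base, kernel_size=2, stride=2)
        self.dec1 = double_conv(base * 2, base)
        self.head = nn.Conv2d(base, num_classes, kernel_size=1)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        e1 = self.enc1(x)                   # H x W
        e2 = self.enc2(self.pool(e1))       # H/2 (1st down-sampling)
        e3 = self.enc3(self.pool(e2))       # H/4 (2nd down-sampling)
        b = self.bottleneck(self.pool(e3))  # H/8 (3rd down-sampling)
        d3 = self.dec3(torch.cat([self.up3(b), e3], dim=1))
        d2 = self.dec2(torch.cat([self.up2(d3), e2], dim=1))
        d1 = self.dec1(torch.cat([self.up1(d2), e1], dim=1))
        return self.head(d1)                # per-pixel class logits, same H x W as input


model = SmallUNet()
logits = model(torch.randn(2, 3, 64, 64))
binary_map = logits.argmax(dim=1)           # 0 = background, 1 = crack
print(logits.shape, binary_map.shape)       # torch.Size([2, 2, 64, 64]) torch.Size([2, 64, 64])
```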

Next comes the data. Luckily, other researchers have open-sourced some great datasets, namely CrackForest and TTIS 2016. To make full use of them, we cut the raw images into small patches with a sliding window. This gives us a much larger number of training samples, which is sufficient for our deep learning model.
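The sliding-window step could look roughly like the NumPy sketch below. The function name `sliding_window_patches`, the 64-pixel window, and the 32-pixel stride are assumptions for illustration; the post does not state the actual patch size or stride. Because the stride is smaller than the window, neighbouring patches overlap, which is what multiplies the number of samples. The image and its label mask are cut at the same positions so every patch keeps its ground truth.

```python
import numpy as np


def sliding_window_patches(image, mask, size=64, stride=32):
    """Cut an image (H x W x 3) and its label mask (H x W) into aligned patches.

    Windows that would extend past the image border are skipped.
    """
    h, w = mask.shape
    img_patches, mask_patches = [], []
    for top in range(0, h - size + 1, stride):
        for left in range(0, w - size + 1, stride):
            img_patches.append(image[top:top + size, left:left + size])
            mask_patches.append(mask[top:top + size, left:left + size])
    return np.stack(img_patches), np.stack(mask_patches)


# Dummy 320 x 480 image and mask, just to show the resulting shapes.
image = np.zeros((320, 480, 3), dtype=np.uint8)
mask = np.zeros((320, 480), dtype=np.uint8)
imgs, masks = sliding_window_patches(image, mask)
print(imgs.shape, masks.shape)  # (126, 64, 64, 3) (126, 64, 64)
```

Here 9 vertical positions times 14 horizontal positions give 126 patches from a single image.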

Training is fairly standard: Adam as the optimizer, cross-entropy as the loss, plus cross-validation. Everything went smoothly. I implemented the model in PyTorch.
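A minimal sketch of that recipe (Adam, cross-entropy, k-fold cross-validation) in PyTorch follows. Everything specific here is an assumption: the helper names `k_fold_indices` and `train_one_fold`, k = 5, the learning rate, the epoch count, and the single convolution used as a placeholder for the UNet. For brevity each epoch is one full-batch step; a real run would iterate over mini-batches with a `torch.utils.data.DataLoader`.

```python
import numpy as np
import torch
import torch.nn as nn


def k_fold_indices(n_samples, k=5, seed=0):
    """Yield (train_idx, val_idx) index arrays for k-fold cross-validation."""
    order = np.random.default_rng(seed).permutation(n_samples)
    folds = np.array_split(order, k)
    for i in range(k):
        train_idx = np.concatenate([folds[j] for j in range(k) if j != i])
        yield train_idx, folds[i]


def train_one_fold(images, masks, train_idx, val_idx, epochs=2, lr=1e-3):
    """Train a fresh model with Adam + cross-entropy; return the validation loss."""
    # Placeholder model standing in for the UNet: maps (N, 3, H, W) -> (N, 2, H, W) logits.
    model = nn.Conv2d(3, 2, kernel_size=3, padding=1)
    optimizer = torch.optim.Adam(model.parameters(), lr=lr)
    criterion = nn.CrossEntropyLoss()  # logits (N, C, H, W), integer labels (N, H, W)

    tr, va = torch.as_tensor(train_idx), torch.as_tensor(val_idx)
    for _ in range(epochs):
        model.train()
        optimizer.zero_grad()
        loss = criterion(model(images[tr]), masks[tr])
        loss.backward()
        optimizer.step()

    model.eval()
    with torch.no_grad():
        return criterion(model(images[va]), masks[va]).item()


# Random stand-in data: 20 RGB patches with binary (0/1) crack labels.
torch.manual_seed(0)
images = torch.rand(20, 3, 32, 32)
masks = torch.randint(0, 2, (20, 32, 32))
val_losses = [train_one_fold(images, masks, tr, va)
              for tr, va in k_fold_indices(len(images), k=5)]
print(f"mean validation loss over {len(val_losses)} folds: {np.mean(val_losses):.3f}")
```

Training a fresh model per fold keeps each validation fold unseen by the model evaluated on it; the mean over folds is the cross-validated estimate.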

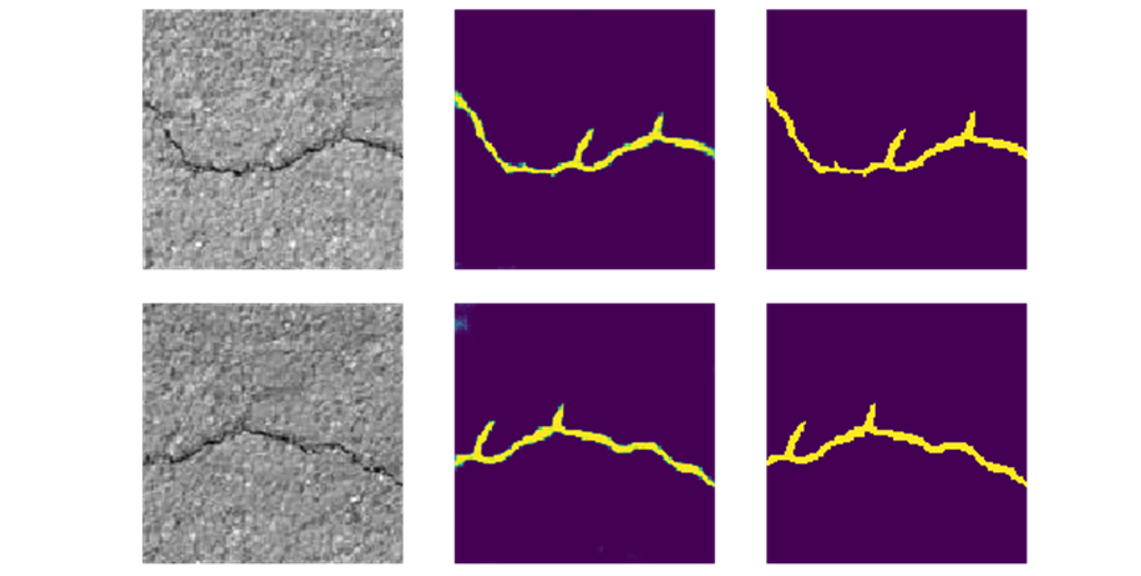

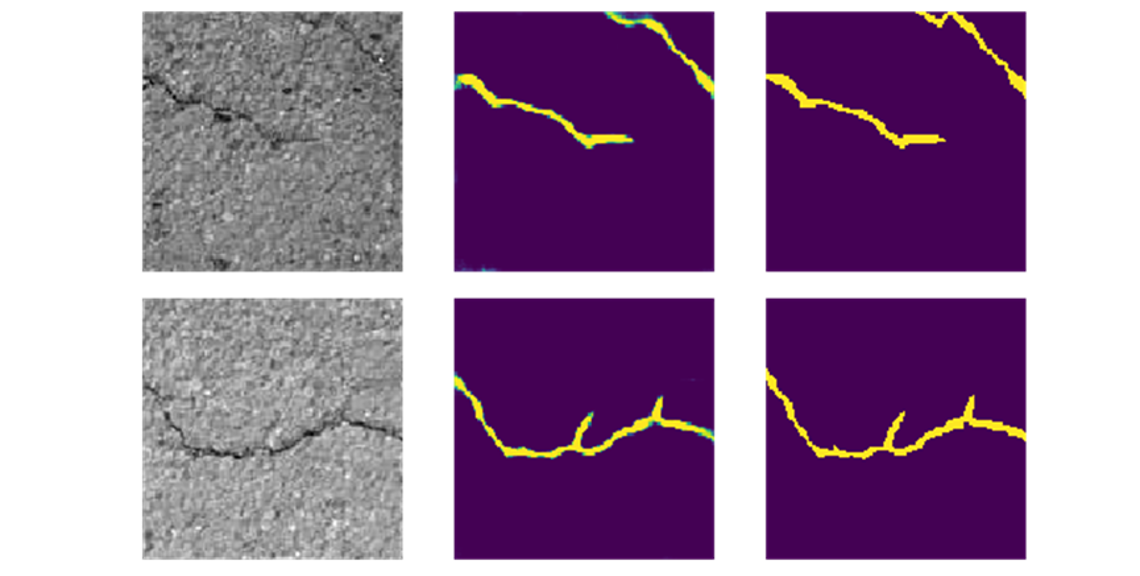

For testing, let's look at some results directly. Left: input image; middle: UNet output; right: ground truth.
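A three-panel comparison like the one described above could be assembled with a small NumPy helper such as the sketch below. The function names `logits_to_mask` and `comparison_panel` are hypothetical, and the sketch assumes the network outputs two-channel logits (background, crack) per pixel, so the binary prediction is the argmax over channels. Crack pixels are drawn white and background black.

```python
import numpy as np


def logits_to_mask(logits):
    """Convert (2, H, W) per-pixel class logits to a binary crack mask via argmax."""
    return logits.argmax(axis=0).astype(np.uint8)


def comparison_panel(image, pred_mask, gt_mask):
    """Place image | prediction | ground truth side by side as one H x 3W x 3 array.

    image: H x W x 3 uint8; masks: H x W with values in {0, 1}.
    """
    def to_rgb(mask):
        # 1 (crack) -> 255 (white), 0 (background) -> 0 (black), repeated over RGB.
        return np.repeat((mask * 255).astype(np.uint8)[..., None], 3, axis=2)

    return np.concatenate([image, to_rgb(pred_mask), to_rgb(gt_mask)], axis=1)


rng = np.random.default_rng(0)
image = rng.integers(0, 256, size=(64, 64, 3)).astype(np.uint8)
logits = rng.normal(size=(2, 64, 64))
gt = rng.integers(0, 2, size=(64, 64))
panel = comparison_panel(image, logits_to_mask(logits), gt)
print(panel.shape)  # (64, 192, 3)
```

The resulting array can be written to disk with any image library.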