Scraping Columbia CS Courses and Evaluations

Tools

- Scrapy: for web scraping

- Ghostscript: for PDF text extraction

Get all course information

Columbia uses a website called Vergil to help students plan their courses, so all course information can be found there. First, check the structure of the web page.

The URL https://vergil.registrar.columbia.edu/#/courses/COMS shows the results of a search for COMS (CS department courses).

In the Network panel of Chrome Developer Tools, there is a JSON response that contains all the results of the query. Let’s check where it comes from. It is served from https://vergil.registrar.columbia.edu/doc-adv-queries.php?key=COMS&moreresults=2 , so key and moreresults appear to be the two parameters of this query, and the site returns JSON containing everything we need. We can write a spider to do the rest.
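As a minimal sketch of the request described above, the query URL can be assembled from its two observed parameters. The helper name `build_query_url` is hypothetical, and treating `moreresults=2` as a safe default is an assumption taken from the single URL seen in the Network panel.

```python
from urllib.parse import urlencode

# Endpoint observed in the browser's Network panel.
BASE_URL = "https://vergil.registrar.columbia.edu/doc-adv-queries.php"


def build_query_url(key: str, moreresults: int = 2) -> str:
    """Return the JSON query URL for one department key such as 'COMS'.

    The meaning of `moreresults` is not documented; 2 is simply the value
    seen in the browser (an assumption, not a known API contract).
    """
    query = urlencode({"key": key, "moreresults": moreresults})
    return f"{BASE_URL}?{query}"


if __name__ == "__main__":
    print(build_query_url("COMS"))
```

In a Scrapy spider, a list of such URLs could serve as `start_urls`, with the response body decoded as JSON in the `parse` callback.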

For convenience, I only fetch two categories, EECS and COMS. The JSON contains class information as well as the instructors. Since I want to get each professor’s evaluation results from the university evaluation system, I extract the professor’s UNI (a unique identification code at Columbia).
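The exact layout of the JSON is not shown here, so the following sketch does not assume one. It walks the decoded data and collects every string stored under a given key. The key name `"uni"` and the sample payload are hypothetical; the real field name has to be read off the actual response.

```python
import json
from typing import Any, Iterator, List


def iter_values(node: Any, key: str) -> Iterator[Any]:
    """Yield every value stored under `key` anywhere in decoded JSON."""
    if isinstance(node, dict):
        for name, value in node.items():
            if name == key:
                yield value
            yield from iter_values(value, key)
    elif isinstance(node, list):
        for item in node:
            yield from iter_values(item, key)


def unique_unis(payload: str, key: str = "uni") -> List[str]:
    """Return distinct UNI strings in first-seen order.

    `key` defaults to "uni", which is an assumed field name.
    """
    data = json.loads(payload)
    found = (v for v in iter_values(data, key) if isinstance(v, str))
    return list(dict.fromkeys(found))  # dict keys keep insertion order


if __name__ == "__main__":
    # Made-up payload, only to show the call.
    sample = '{"results": [{"instructors": [{"uni": "ab1234"}]}]}'
    print(unique_unis(sample))
```

Deduplicating matters because the same professor usually teaches several courses, and each UNI only needs one evaluation lookup.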

The evaluation page looks like this:

Each link points to a PDF file of evaluation results. It would be easier for us if there were a JSON file containing all the links. Let’s check.

Great, there actually is one, and it is named after the professor’s UNI. For example, the link https://vergil.registrar.columbia.edu/cms/cw/eval/eg2173 returns it. The path key in the JSON is what we need: the link to the PDF evaluation result. A new spider can handle this step!
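A rough sketch of this step: build the per-professor URL from the UNI, then pull every `path` value out of the returned JSON. Where the `path` entries sit inside the JSON, and whether they are absolute or relative links, are assumptions; `urljoin` is used so that either form resolves.

```python
import json
from typing import List
from urllib.parse import urljoin

EVAL_BASE = "https://vergil.registrar.columbia.edu/cms/cw/eval/"


def eval_index_url(uni: str) -> str:
    """Return the URL of the JSON index for one professor's evaluations."""
    return urljoin(EVAL_BASE, uni)


def pdf_links(payload: str, base: str = EVAL_BASE) -> List[str]:
    """Collect every string found under a "path" key, as absolute URLs."""
    paths: List[str] = []

    def remember_path(obj: dict) -> dict:
        # json.loads calls this hook for every JSON object it decodes,
        # so nesting depth does not matter.
        value = obj.get("path")
        if isinstance(value, str):
            paths.append(value)
        return obj

    json.loads(payload, object_hook=remember_path)
    return [urljoin(base, p) for p in paths]


if __name__ == "__main__":
    print(eval_index_url("eg2173"))
```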

To see the evaluation results, we must first authenticate through CAS (Central Authentication Service), which is fairly easy: log in at https://cas.columbia.edu/cas/login?service=ias-qmss&destination=https%3A%2F%2Fvergil.registrar.columbia.edu%2Fcms%2Fuser%2Fwind first, then scrape!
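The login flow could look roughly like the sketch below, written with the standard library only. It assumes the login page is an ordinary HTML form with hidden fields that must be echoed back, that the form posts to the same URL it was loaded from, and that the credential fields are named `username` and `password`. None of this is confirmed here, so check the real form before relying on it.

```python
from html.parser import HTMLParser
from http.cookiejar import CookieJar
from urllib.parse import urlencode
from urllib.request import HTTPCookieProcessor, build_opener


class HiddenInputs(HTMLParser):
    """Collect name/value pairs of <input type="hidden"> elements."""

    def __init__(self) -> None:
        super().__init__()
        self.fields = {}

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        if tag == "input" and attr.get("type") == "hidden" and attr.get("name"):
            self.fields[attr["name"]] = attr.get("value") or ""


def build_login_body(login_html: str, username: str, password: str) -> bytes:
    """Return the URL-encoded POST body: hidden fields plus credentials."""
    parser = HiddenInputs()
    parser.feed(login_html)
    form = dict(parser.fields)
    # Field names are assumptions; verify them against the real form.
    form.update({"username": username, "password": password})
    return urlencode(form).encode("ascii")


def login(login_url: str, username: str, password: str):
    """Log in and return an opener whose cookie jar holds the session."""
    opener = build_opener(HTTPCookieProcessor(CookieJar()))
    with opener.open(login_url) as resp:
        html = resp.read().decode("utf-8", errors="replace")
    # Passing `data` makes urllib send a POST request.
    with opener.open(login_url, data=build_login_body(html, username, password)):
        pass
    return opener
```

Inside Scrapy, `scrapy.FormRequest.from_response` fills in a form's hidden fields automatically, which may be the more convenient route for a spider.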

At this point, we have links to the evaluation results for all professors and all courses. What is left is to download them, which again requires passing CAS first. A new spider can do it.
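A minimal download sketch follows. It assumes `opener` is a `urllib` opener that already carries a logged-in CAS session in its cookie jar; the output directory `evals` and the fallback file name are arbitrary choices made for illustration.

```python
import os
from urllib.parse import unquote, urlsplit


def local_name(url: str, out_dir: str = "evals") -> str:
    """Derive a local file path from the last segment of the URL path."""
    name = os.path.basename(unquote(urlsplit(url).path)) or "evaluation.pdf"
    return os.path.join(out_dir, name)


def download(opener, url: str, out_dir: str = "evals") -> str:
    """Fetch one PDF through an authenticated opener and save it to disk."""
    os.makedirs(out_dir, exist_ok=True)
    target = local_name(url, out_dir)
    with opener.open(url) as resp, open(target, "wb") as fh:
        fh.write(resp.read())
    return target
```

If two links end in the same file name, the later download overwrites the earlier one, so prefixing the name with the professor's UNI may be worth considering.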

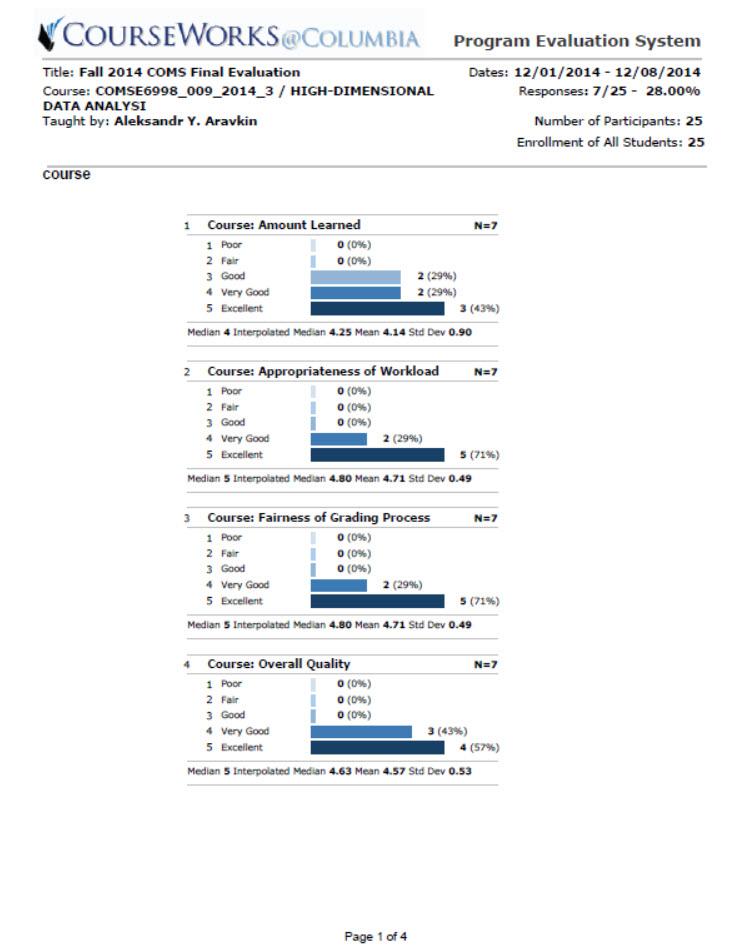

The pdf file looks like:

This is very unfriendly if we want to analyze the numbers inside. Luckily, there are tools for this, and Ghostscript is one good choice.

    gs -sDEVICE=txtwrite -o output.txt input.pdf

This command converts a PDF file into plain text. The result looks like:

    Program Evaluation System
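To convert a whole folder of downloaded PDFs, the Ghostscript call can be wrapped in a small script. This is only a sketch: it assumes `gs` is on the PATH, and the directory arguments are placeholders.

```python
import subprocess
from pathlib import Path
from typing import List


def gs_command(pdf: Path, txt: Path) -> List[str]:
    """Build the Ghostscript argument list that writes `pdf` as text."""
    return ["gs", "-sDEVICE=txtwrite", "-o", str(txt), str(pdf)]


def convert_all(pdf_dir: str, txt_dir: str) -> List[Path]:
    """Convert every *.pdf in pdf_dir to a .txt file in txt_dir."""
    out = Path(txt_dir)
    out.mkdir(parents=True, exist_ok=True)
    written = []
    for pdf in sorted(Path(pdf_dir).glob("*.pdf")):
        txt = out / (pdf.stem + ".txt")
        # check=True raises CalledProcessError if Ghostscript fails.
        subprocess.run(gs_command(pdf, txt), check=True)
        written.append(txt)
    return written
```

Passing the arguments as a list avoids shell quoting problems with file names that contain spaces.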

There is still a lot of work to do before we can truly use this data, but I’d like to leave that for the future.